Performance

Measuring the quality of a system is a classic engineering question.

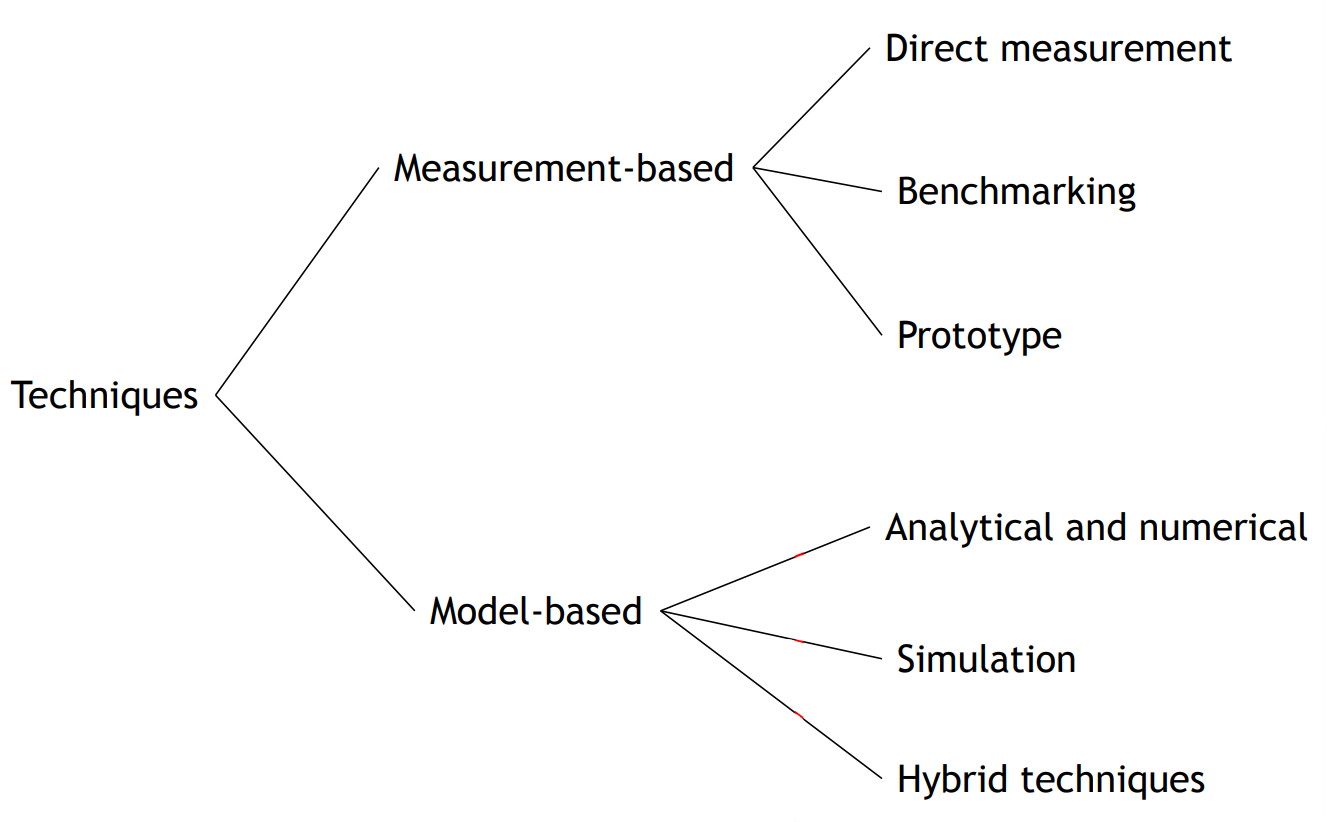

Possible models that we will see are:

- Queueing networks

Queueing networks

Queueing theory studies what happens when there are many jobs and scarce resources and, consequently, long queues and delays. Examples of queues in computer systems:

- A CPU uses a time-sharing scheduler to serve the queue of processes ready to run

- A disk serves a queue of requests waiting to read or write blocks

- A router in a network serves a queue of packets waiting to be routed

- Databases have lock queues, where transactions wait to acquire the lock on a record
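To make the link between scarce resources and growing delays concrete, here is a minimal sketch of a single server with a FIFO queue. The helper name `fifo_single_server` and its input format (sorted arrival times plus one service time per job) are hypothetical, chosen for illustration. Each job starts service only when it has arrived and the server has finished the previous job, so any gap between the two is time spent waiting in the queue.

```python
from typing import List, Tuple


def fifo_single_server(arrivals: List[float],
                       services: List[float]) -> List[Tuple[float, float, float]]:
    """Simulate one FIFO server (hypothetical helper, for illustration).

    arrivals: arrival times, sorted in increasing order.
    services: services[i] is the service time of job i.
    Returns (start, completion, waiting_time) for each job.
    """
    results = []
    free_at = 0.0  # time at which the server finishes its current job
    for arrival, service in zip(arrivals, services):
        # The job waits in the buffer if the server is still busy.
        start = max(arrival, free_at)
        free_at = start + service
        results.append((start, free_at, start - arrival))
    return results


# Jobs arrive every 1 s but each one needs 2 s of service.
for start, done, wait in fifo_single_server([0, 1, 2, 3], [2, 2, 2, 2]):
    print(f"start={start} done={done} wait={wait}")
```

In this run the waiting time grows by one second per job, because jobs arrive (1 job/s) faster than the server can complete them (0.5 jobs/s).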

Different aspects characterize queuing models:

- Arrival: the frequency of incoming jobs matters; it is described by the average arrival rate $\lambda$ (req/s).

- Service: the service time is the time a computer spends satisfying a job; $S$ is its average duration and $\mu = 1/S$ is the maximum service rate.

- Queue: jobs or customers which cannot receive service immediately must wait in a buffer.

- Population: members of the population can be indistinguishable, or divided into classes whose members differ in one or more characteristics, for example arrival rate or service demand.

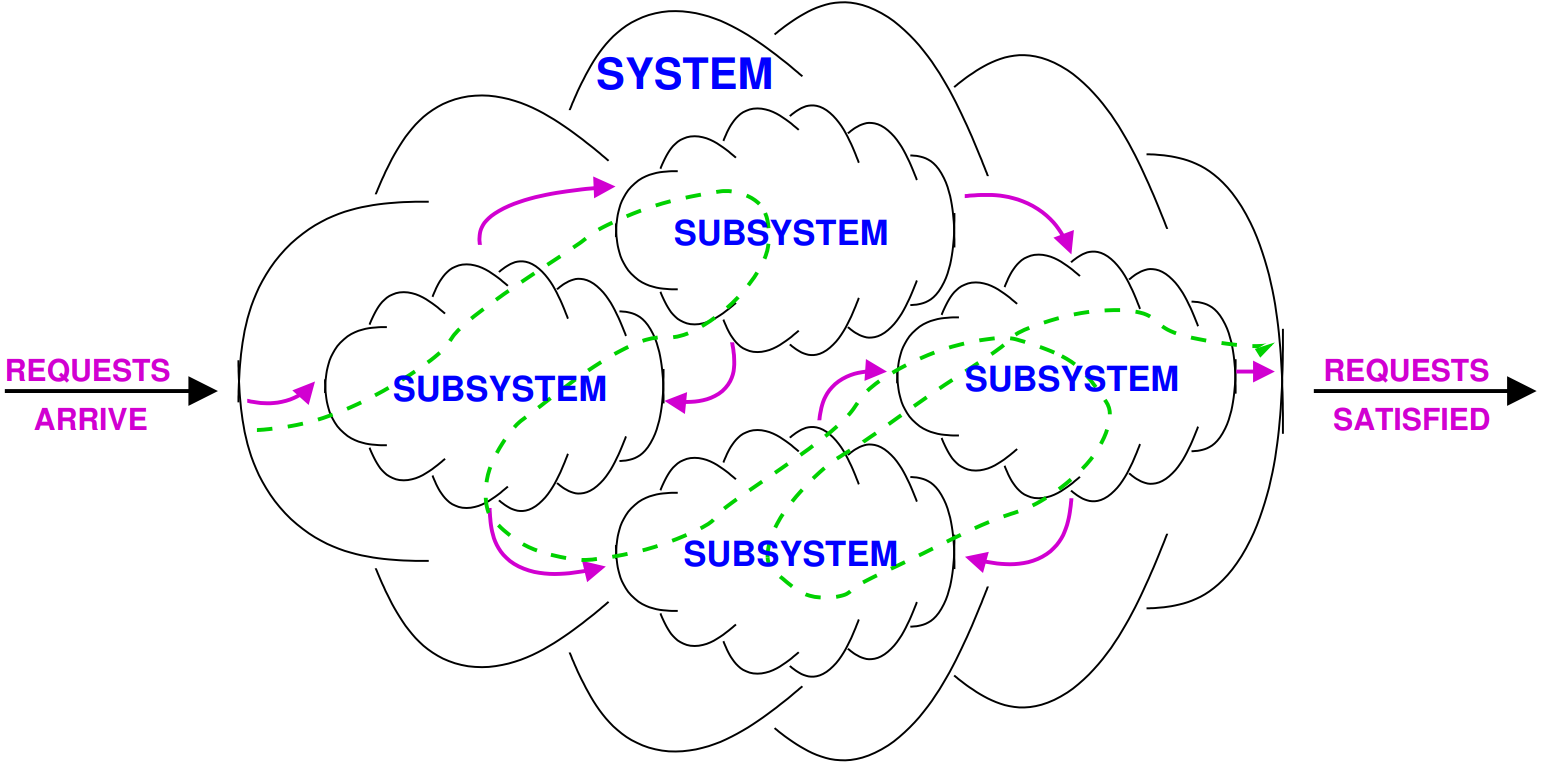

- Routing: the policy that selects the next destination of a job after it finishes service at a station must be defined. It can be:

    - probabilistic: the destination is chosen at random

    - round robin: the destination chosen by the job rotates among all the possible ones

    - shortest queue: the job is moved to the subsystem with the smallest number of jobs waiting
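The three routing policies above can be sketched as small selection functions. This is a minimal illustration, not a simulator: the function and class names (`probabilistic`, `RoundRobin`, `shortest_queue`) and the station names are hypothetical, routing probabilities and queue lengths are assumed to be plain dictionaries, and ties in the shortest-queue policy are broken by taking the first station listed.

```python
import itertools
import random
from typing import Dict, Hashable, Iterable


def probabilistic(probs: Dict[Hashable, float], rng: random.Random) -> Hashable:
    """Pick the next station at random, weighted by its routing probability."""
    stations = list(probs)
    weights = [probs[s] for s in stations]
    return rng.choices(stations, weights=weights, k=1)[0]


class RoundRobin:
    """Rotate the destination among all possible stations."""

    def __init__(self, stations: Iterable[Hashable]):
        self._cycle = itertools.cycle(list(stations))

    def choose(self) -> Hashable:
        return next(self._cycle)


def shortest_queue(queue_lengths: Dict[Hashable, int]) -> Hashable:
    """Send the job to the station with the fewest jobs waiting (ties: first one)."""
    return min(queue_lengths, key=queue_lengths.get)


rng = random.Random(42)  # seeded so the probabilistic choice is reproducible
print(probabilistic({"cpu": 0.7, "disk": 0.3}, rng))
rr = RoundRobin(["disk1", "disk2", "disk3"])
print([rr.choose() for _ in range(4)])
print(shortest_queue({"disk1": 3, "disk2": 1, "disk3": 2}))
```

Probabilistic routing needs no state, round robin only remembers its position in the cycle, while shortest queue needs the current queue lengths of all candidate stations at every decision.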


Operational laws

Operational laws describe the average behavior of any system, without making assumptions about the distributions of the random variables involved. They are simple, easy-to-apply equations.

If we observe a system, we can measure these quantities:

- $T$, the length of time we observe the system

- $A$, the number of request arrivals we observe

- $C$, the number of request completions we observe

- $B$, the total amount of time during which the system is busy

- $N$, the average number of jobs in the system

- $R$, the average time a request remains in the system (also called residence time). It corresponds to the period of time from when a user submits a request until that user’s response is returned.

- $R_k$ of a sub-system $k$ is its response time: the average time a job spends in $k$ once it enters the node.

- $Z$ is the think time, the time between the completion of a request and the job becoming available as a new request; in interactive systems it is basically the time during which the application waits for the user.
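As an illustration of how these quantities can be obtained in practice, here is a minimal sketch that computes them from a trace of requests. The `Job` record and the `measure` function are hypothetical, and the sketch assumes a single server (so service intervals never overlap) and that every job both arrives and completes inside the observation window $[0, T]$; the think time $Z$ is not derived here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Job:
    arrival: float     # time the request enters the system
    start: float       # time the (single) server starts serving it
    completion: float  # time the response is returned


def measure(jobs: List[Job], T: float) -> Dict[str, float]:
    """Basic measured quantities for a single-server system observed on [0, T].

    Assumes every job in `jobs` both arrives and completes inside the window.
    """
    A = sum(1 for j in jobs if 0 <= j.arrival <= T)     # arrivals
    C = sum(1 for j in jobs if 0 <= j.completion <= T)  # completions
    # With one server, service intervals never overlap, so busy time is their sum.
    B = sum(j.completion - j.start for j in jobs)
    # Total time spent in the system by all jobs (area under n(t)).
    area = sum(j.completion - j.arrival for j in jobs)
    N = area / T   # time-average number of jobs in the system
    R = area / C   # average residence time per completed request
    return {"T": T, "A": A, "C": C, "B": B, "N": N, "R": R}


trace = [Job(0, 0, 2), Job(1, 2, 3), Job(4, 4, 5)]
print(measure(trace, T=10))
```

For this hypothetical trace, the throughput $C/T = 0.3$ req/s multiplied by $R = 5/3$ s gives $0.5$, which equals the measured $N$, as Little's law predicts.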

From the quantities we can derive these equations:

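- $\lambda = A/T$, the arrival rate

- $X = C/T$, the throughput or completion rate

- $U = B/T$, the utilization

- $S = B/C$, the mean service time per completed job. Note that $S$ is the average time a job spends *being served*, i.e. excluding the time spent waiting in the queue.

- $U = XS$, the utilization law (it follows from $B/T = (C/T)(B/C)$), which is useful in situations like “consider a service rate of $X$ where each job needs a time $S$ to be completed”.

- $N = XR$, which is called Little's law.

- $R = N/X - Z$, equivalently $N = X(R + Z)$, in the case of interactive systems.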

- $V_k = C_k/C$ is the visit count of sub-system $k$, where $C_k$ is the number of completions at $k$. It is possible that $V_k > 1$ when there are “loops” in the model.

- $X_k = V_k X$ is the forced flow law, and it captures the relationship between the throughput of sub-system $k$ and that of the whole system.

- $D_k = V_k S_k$, where $S_k$ is the mean service time per visit at $k$, is the service demand: the total time sub-system $k$ is needed by a generic request. It is a crucial characteristic of the sub-system/resource.

- $R_k$ is the time spent by a job in a service station per visit, including the queueing time.

- $\tilde{R}_k = V_k R_k$ is the residence time, the time spent by a job in a service station over its whole stay in the system, accounting for all its visits to the component.

- $U_k = X_k S_k = X D_k$ is the utilization of resource/sub-system $k$.

If the system is job flow balanced, i.e. $A = C$, then $\lambda = X$. The above formulas can also be applied to a single sub-system $k$.
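The laws above can be collected into two small helper functions, sketched below as an illustration. The function names and the per-station inputs `C_k` (completions at station $k$) and `B_k` (busy time of station $k$) are hypothetical, and all numbers in the example are invented.

```python
import math
from typing import Dict


def system_laws(T: float, A: int, C: int, B: float, N: float,
                Z: float = 0.0) -> Dict[str, float]:
    """Derive the system-level operational quantities from measurements."""
    lam = A / T        # arrival rate
    X = C / T          # throughput (completion rate)
    U = B / T          # utilization
    S = B / C          # mean service time per completed job
    assert math.isclose(U, X * S)   # sanity check of the utilization law U = X S
    R = N / X - Z      # Little's law (Z = 0) or interactive response time law
    return {"lambda": lam, "X": X, "U": U, "S": S, "R": R}


def station_laws(T: float, C: int, C_k: Dict[str, int],
                 B_k: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """Per-station visit counts, throughputs, demands and utilizations."""
    X = C / T
    out = {}
    for k in C_k:
        V = C_k[k] / C          # visit count V_k
        Xk = V * X              # forced flow law: X_k = V_k X
        Sk = B_k[k] / C_k[k]    # service time per visit S_k
        Dk = V * Sk             # service demand D_k = V_k S_k (= B_k / C)
        Uk = X * Dk             # utilization U_k = X D_k (= X_k S_k)
        out[k] = {"V": V, "X": Xk, "S": Sk, "D": Dk, "U": Uk}
    return out


# Hypothetical interactive system: 10 users, 4 s think time, 100 s observation.
print(system_laws(T=100, A=200, C=200, B=80, N=10, Z=4))
print(station_laws(T=100, C=200, C_k={"cpu": 1000, "disk": 800},
                   B_k={"cpu": 50, "disk": 40}))
```

In the station example each $U_k$ also equals the station's busy time divided by $T$ (0.5 and 0.4), i.e. the utilization law applied to a single sub-system.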

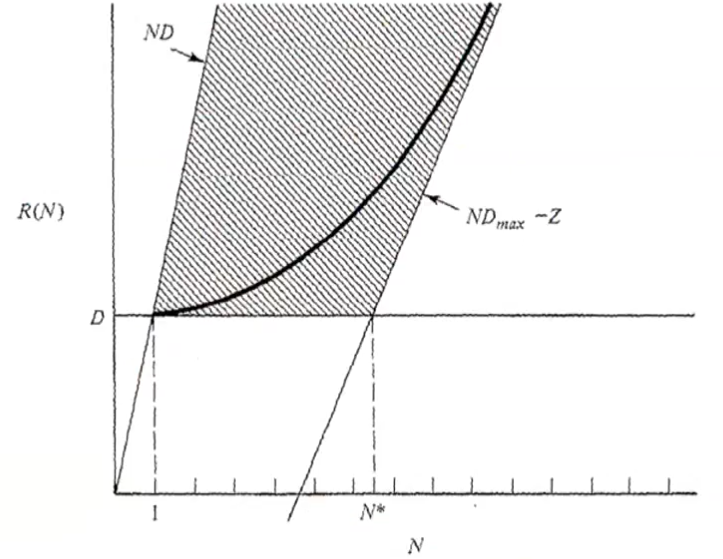

Performance bounds

We only consider single-class systems and determine asymptotic bounds on a system’s performance indices. Our focus will be on highlighting the bottleneck and quantifying its impact.

The considered models and the bounding analysis make use of the following parameters:

- $K$, the number of service centers

- $D_{max}$, the largest service demand at any single center

- $Z$, the average think time, for interactive systems

And the following performance quantities are considered:

- $X$, the system throughput

- $R$, the system response time

We distinguish between open models and closed models.

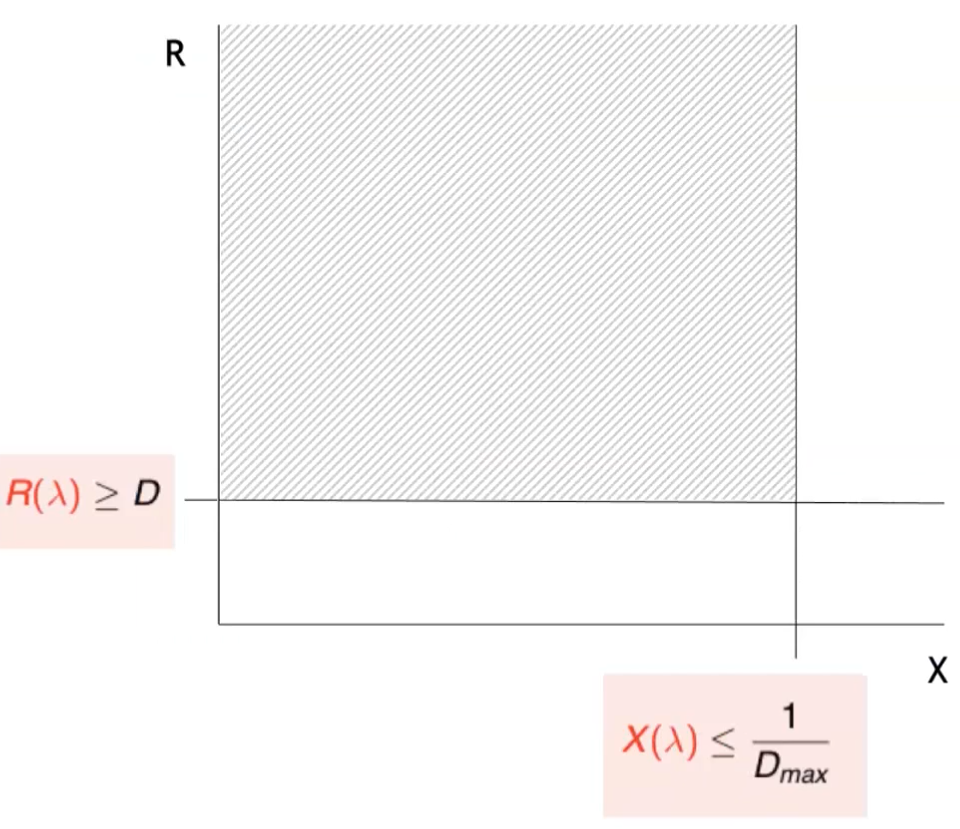

Open Models

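In open models we have the following bound on throughput:

$$X(\lambda) \le \frac{1}{D_{max}}$$

which is essentially derived from these relations:

$$U_k(\lambda) = \lambda D_k \le 1 \quad \forall k \;\Rightarrow\; \lambda \le \frac{1}{D_{max}}$$

And for the response time:

$$R(\lambda) \ge D = \sum_{k=1}^{K} D_k$$

The upper bound for $R$ cannot be defined, while the minimum response time is the sum of the service demands of all components.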
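The open-model bounds can be computed directly from the service demands. The sketch below is illustrative: the function names and the demand values are hypothetical, and it only evaluates the asymptotic bounds, i.e. the saturation arrival rate $1/D_{max}$, the minimum response time $D$, and the per-center utilizations $U_k = \lambda D_k$ at a chosen arrival rate.

```python
from typing import Dict


def open_bounds(demands: Dict[str, float]) -> Dict[str, object]:
    """Asymptotic bounds for an open, single-class model.

    demands maps each service center k to its service demand D_k (seconds).
    """
    D = sum(demands.values())                    # minimum response time: R >= D
    bottleneck = max(demands, key=demands.get)   # center with the largest demand
    D_max = demands[bottleneck]
    return {
        "lambda_sat": 1 / D_max,  # throughput bound X(lambda) <= 1 / D_max
        "R_min": D,
        "bottleneck": bottleneck,
    }


def utilizations(lam: float, demands: Dict[str, float]) -> Dict[str, float]:
    """U_k = lambda * D_k; every U_k must stay below 1."""
    return {k: lam * d for k, d in demands.items()}


demands = {"cpu": 0.25, "disk": 0.2}   # hypothetical demands in seconds
print(open_bounds(demands))
print(utilizations(3.0, demands))
```

With these demands the CPU is the bottleneck, so the system cannot sustain more than 4 req/s, and no request can complete in less than 0.45 s.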

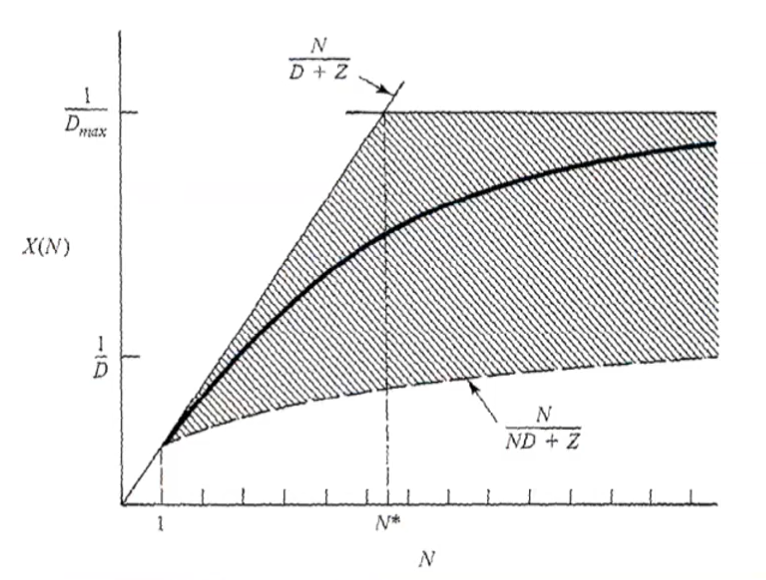

Closed models

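In a closed model with $N$ jobs, we can distinguish whether the system is operating under “light load” (small $N$) or “heavy load” (large $N$).

- Light load: $X(N) \le \frac{N}{D + Z}$ and $R(N) \ge D$, where $D = \sum_{k=1}^{K} D_k$ is the total service demand

- Heavy load: $X(N) \le \frac{1}{D_{max}}$ and $R(N) \ge N D_{max} - Z$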
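Taken together, the light-load and heavy-load regimes give the standard asymptotic bounds $X(N) \le \min\left(\frac{N}{D + Z}, \frac{1}{D_{max}}\right)$ and $R(N) \ge \max(D, N D_{max} - Z)$, with $D = \sum_k D_k$. The sketch below evaluates them; the function names and the demand values are hypothetical. It also computes the crossover population $N^* = (D + Z)/D_{max}$, a standard quantity from bounding analysis not named in these notes, at which the two regimes meet.

```python
from typing import Dict, Tuple


def closed_bounds(N: int, demands: Dict[str, float],
                  Z: float = 0.0) -> Tuple[float, float]:
    """Asymptotic bounds for a closed, single-class model with N jobs.

    Returns (upper bound on X(N), lower bound on R(N)).
    """
    D = sum(demands.values())        # total service demand
    D_max = max(demands.values())    # bottleneck demand
    X_upper = min(N / (D + Z), 1 / D_max)   # light-load vs heavy-load throughput bound
    R_lower = max(D, N * D_max - Z)         # light-load vs heavy-load response bound
    return X_upper, R_lower


def crossover(demands: Dict[str, float], Z: float = 0.0) -> float:
    """Population N* = (D + Z) / D_max at which the two regimes meet."""
    return (sum(demands.values()) + Z) / max(demands.values())


demands = {"cpu": 0.25, "disk": 0.2}   # hypothetical demands in seconds
for n in (1, 5, 30):
    print(n, closed_bounds(n, demands, Z=4.0))
print("N* =", crossover(demands, Z=4.0))
```

Below $N^*$ the light-load terms dominate (throughput grows roughly linearly with $N$); above it the bottleneck caps throughput at $1/D_{max}$ and the response time bound grows linearly with $N$.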