Learning Theory

Training error and true error are two different types of errors:

- Training error: error rate which measures how well the model fits the training data

- True error: it refers to the average prediction error over all possible input values in an unseen dataset.

We cannot know the true error because we don’t know the true distribution of data that the algorithm will work on. Instead, we can measure the model performance on a known set of training data (the “empirical” risk) and try to make an estimation of the true error.

No free lunch theorem

No Free Lunch theorems are a large class of theorems. Here we will analyze only the “main” NFL theorem only for the binary classification problem:

“Independently from the learner, any technique in average will have an accuracy of 0.5, that in a binary classification problem is exactly the same accuracy of random guessing. Any learner cannot perform better than random guessing

Takeaways:

- NFL theorem states that an algorithm can perform poorly and not consistently outperform random guessing on average. However, this is based on the assumption that all possible hypotheses are equally likely to occur and there is no regularity in the world. Actually in real-world applications, some samples have lower probabilities of occurring, so specific algorithms tailored to specific tasks are needed and possible.

- There is no such thing as a winner-takes-all in ML! In ML we always operate under some assumptions! It is important to understand that there are no guarantees of success in all scenarios.

- While we can find algorithms that perform well on specific tasks, they may not generalize to other tasks.

- It is always possible to find data sets where an algorithm performs poorly.

VC Dimension

Some concepts:

- Dichotomy: given a set of points a dichotomy is a partition of that set in 2 disjunct subsets.

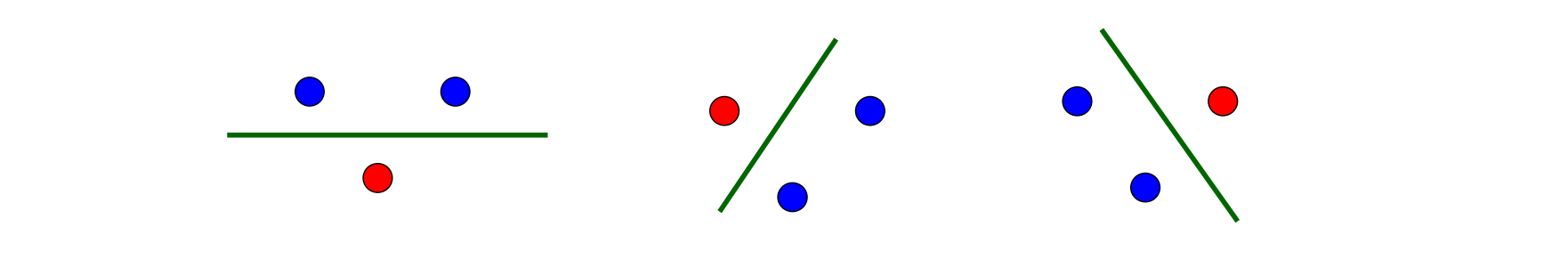

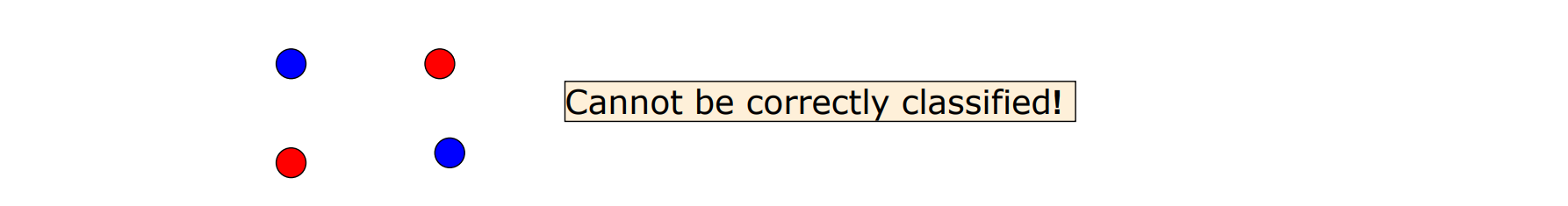

- Shattering: we say that a set is shattered by an hypothesis space if and only if for every dichotomy in there exist some hypotheses in consistent with this dichotomy. Basically is shattering if it is able to perfectly classify all the examples in independently of how they are labelled.

- : The Vapnik-Chervonenkis dimension is the cardinality of the largest set of instances shattered by .

Besides the formalism the more complex is your hypothesis space the more you are likely that you’re be able to shatter an higher cardinality set of instances, since “you can draw complex boundaries” to classify the points.

A linear classifier is a model that tries to draw a straight line (or plane, or hyperplane) to separate two groups of data points. In a 2D input space, this means we’re trying to draw a line that separates one group of points from another. The VC dimension for this linear classifier in 2D is 3. This means that if we have up to three points in our dataset, we can always find some way to draw a line between them so that all the points on one side are in one group and all the points on the other side are in another group.

It turns out that the VC dimension for a linear classifier in input space is simply .

So if we have an -dimensional dataset and use a linear classifier model () then according to VC theory, there will always exist some combination of weights such that any set of up to data points can be perfectly classified into two groups using this model. Other examples of are:

- Neural Networks: is the number of parameters

- 1-Nearest Neighbors: is

- SVM with Gaussian Kernel: is

In a exercitation the prof showed that the of a particular hyphotesis space is at least a given number you have to find at least one set of instances of cardinality (one possible location of the points) and show that it can be shattered by , which means to test all possible dichotomies (aka all possible assignements of classes) it’s possible to correctly classify the points. Vice versa to prove that is lower than is slightly more difficult since we have to prove that for all set of instances can’t be shattered by .

The VC dimension is at least k if the hypothesis space shatters at least one subset of cardinality k in the instance space.

PAC-Learning

Probably Approximately Correct is a statistical tool to assess the quality/performance of a learning model given information from the samples (either a test set (simplest scenario) or train set). Some definitions:

- A concept class is a set of possible concepts that our model can choose from when making predictions. It represents all possible functions or mappings that could explain the observed data.

- A class of possible target concepts is PAC-learnable by a learner using (the hypothesis space) if for all , for any distribution (such that ), and (such that ), will with a probability at least output a hypothesis such that error , in time that is polynomial in , and size(c).

- A sufficient condition to prove PAC-learnability is proving that a learner requires only a polynomial number of training examples and processing time for each example is polynomial. “If we have a concept class that is PAC-learnable it means that is not hard to learn”.

- The version space is the subset of the hypothesis space

Hthat contains all hypotheses with 0 training error. In practice usually we need to work outside of the version space. - We will use the Hoeffding Bound which is is a tool to build confidence interval: .

- The empirical risk minimizer with the loss function.

We exploit the Hoeffding Bound to evaluate the true loss of the empirical risk minimizer starting from:

- the test set: it’s independent from the training set so the test loss is an unbiased estimator for the true loss.

- the train set: obviously will provide a negatively biased estimator for the true loss.

Using the Test Set

Under the assumption of bounded loss : with probability and where:

- is the true error rate (risk) of our model, which measures how well it performs on new, unseen data points.

- is the empirical error rate (training loss) of our model, which measures how well it fits to a given set of training data.

- can be thought as an upper bound on how much our predictions can deviate from their true values.

- represents the size of our test set, i.e., how many samples we have available to evaluate our model’s performance on new data points.

- represents confidence level or probability threshold we want to achieve with respect to this inequality.

Using the Training Set

We distinguish:

- consistent learners which have zero training error

- agnostic learning is when: if and that the learner will not always output a hypothesis such that but will output a hypothesis such that .

In case of binary classification (finite hypothesis space):

- Finite hypothesis space and consistent learning always):

- Finite hypothesis space and agnostic learning possibly):

When we moving to an infinite hypothesis space, like for example in a linear regression case (where the output is an real number) we have to use the notion of VC dimension, which in some away accounts for the complexity of the hypothesis space. So, in case of infinite hypothesis space and agnostic learning possibly):

PAC takeaways

So basically, this theoretical formula confirms what so far we only said empirically by looking at the different techniques:

- Larger hypotheses space implies larger bound (variance)

- Increasing implies reduced bound (variance)

- Large : low bias, high variance

- Small : high bias, low variance

- There is relationship between train error and prediction error (true error):

- Close relationship between the two when under-fitting

- Relationship lost when over-fitting